Garbage In, Model Out: Data Quality Matters for LLM Pretraining

Jan 5th, 2025

The capabilities of large language models (LLMs) have advanced rapidly in recent years. Their improvement can be primarily attributed to progress along three broad directions: scale, algorithms, and the quality of pretraining data. Post-training approaches such as instruction fine-tuning and direct preference optimization (DPO) have also played a significant role in creating useful LLMs. But in this post, we will focus only on the pretraining phase and on the performance of base models that have not been fine-tuned.

Scale, in terms of both the amount of training data and model size, has increased massively following the discovery of scaling laws, with models growing by up to 4 orders of magnitude while being trained on up to 2 orders of magnitude more tokens. In fact, scale has been the main driver of the impressive performance improvements LLMs have experienced in recent years.

Algorithms, a category under which I count model architectures and training approaches, have by comparison remained relatively similar to those of the work that originally proposed them. There have been advances, especially with respect to more efficient attention implementations and better positional encoding schemes. But by and large, models still follow the same basic structure, and algorithmic improvements have primarily targeted efficiency, ultimately helping with scaling.

The improvements that can be achieved with both scale and algorithms are approaching their limits. Further scaling is hindered by a scarcity of high-quality training data and by the enormous financial and technical costs of training runs and inference. While I believe that new algorithmic approaches are essential and will ultimately be the key to fundamentally better models, progress in this area is unpredictable and much harder to control than scaling. This leaves us with a third direction of improvement: increasing the quality of pretraining data.

Quality Issues in Pretraining Datasets

LLMs are typically pretrained on massive datasets, such as The Pile (207B tokens), RedPajama (30.4T tokens), FineWeb (15T tokens), and The Stack (900B tokens). These datasets combine different sources of text, such as Wikipedia, papers from arXiv, books, StackExchange, GitHub, and Common Crawl (a large-scale crawl of the public web). But the scale and diversity of these datasets make quality control very challenging. Consider, for example, the following two snippets of web text:

Montville Reformed Church Graveyard Gravemarker Photo # 9/P1010129 Index A B C D E F G H I J K L M N O P Q R S T V W Y Z Related Links To Outside Resources:

« Prev Next » Sun Mon Tue Wed Thu Fri Sat 28 29 30 31 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 Cancelled: Stories in the Woods 11/15/2012 - 11:00am to 11:45am Turkey Party 11/17/2012 - 11:00am to 12:00pm 18 19 20 21 22 23 24 Ambler Lego Club 11/19/2012 - 4:30pm to 5:30pm Family Movie 11/20/2012 - 7:00pm to 8:30pm 25 26 27 28 29 30 1 Home

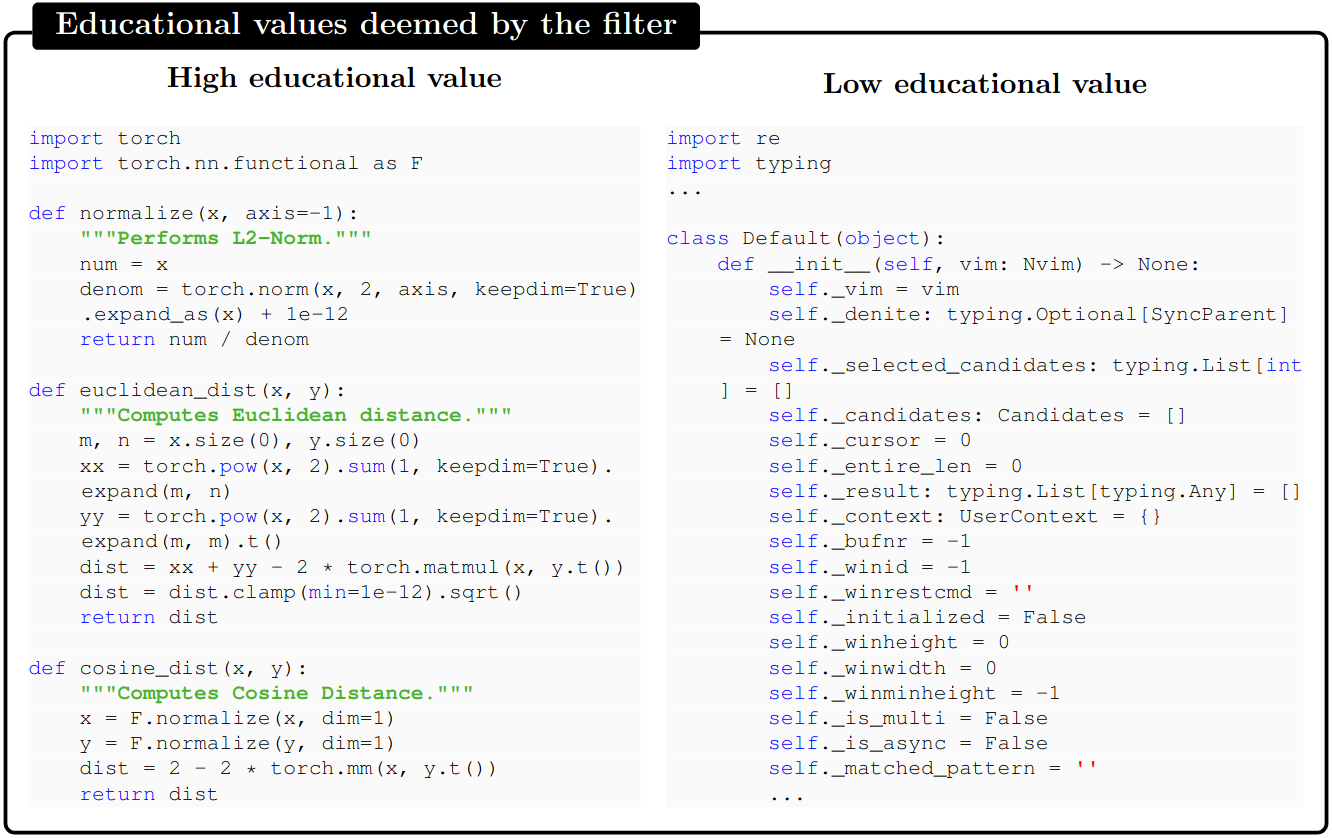

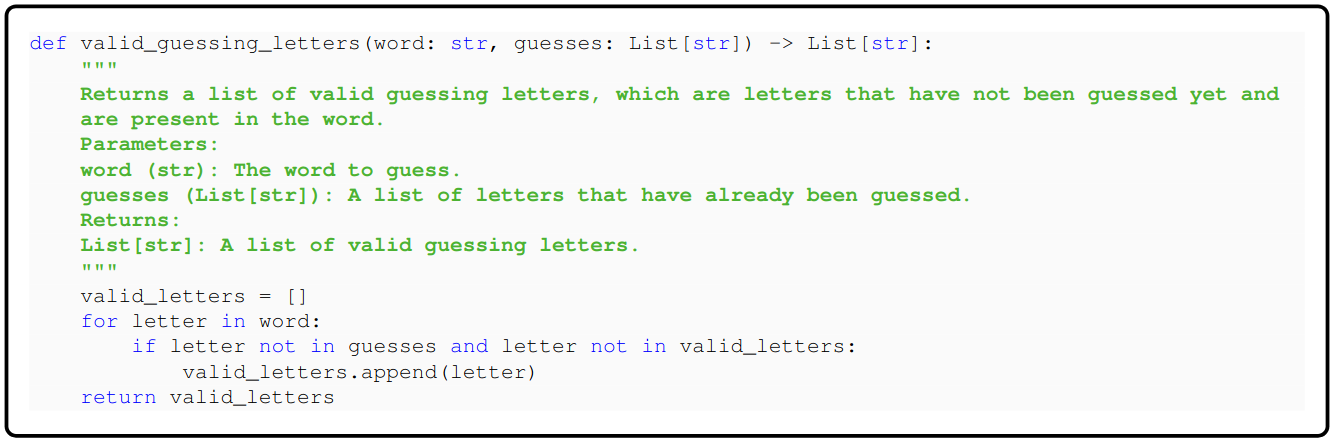

Data quality problems manifest in multiple ways. Text can be repetitive or lack meaningful content, and documents and text snippets can be duplicated, for example licenses or text templates that appear many times. When the researchers behind the "Textbooks Are All You Need" (Textbooks) paper manually inspected code samples in The Stack, they found that many samples had what they termed "low educational value". For instance, many code samples weren't self-contained: they relied on imported modules that weren't visible to the model, which made their semantics hard to understand. Other samples were dominated by trivial boilerplate code or configuration definitions rather than substantive algorithmic logic. When algorithmic logic was present, it was often buried in complex, poorly documented functions. Moreover, the distribution of topics and coding concepts was heavily skewed, potentially leading to blind spots in the model's understanding.
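Two of the symptoms above, duplication and repetitive text, can be caught with cheap heuristics, unlike "educational value", which needs the model-based filters discussed later. The sketch below is illustrative only: it hashes whitespace- and case-normalized documents to drop exact duplicates and computes the fraction of repeated lines per document. The function names and the 0.3 threshold are hypothetical choices, not taken from any of the datasets mentioned here.

```python
import hashlib


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return " ".join(text.lower().split())


def duplicate_line_fraction(text: str) -> float:
    """Fraction of non-empty lines that repeat an earlier line in the same document."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if not lines:
        return 0.0
    seen, dupes = set(), 0
    for line in lines:
        if line in seen:
            dupes += 1
        seen.add(line)
    return dupes / len(lines)


def filter_corpus(docs, max_dup_line_frac=0.3):
    """Drop exact duplicates (after normalization) and highly repetitive documents.

    The 0.3 threshold is a hypothetical default chosen for illustration.
    """
    seen_hashes = set()
    kept = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen_hashes:
            continue  # duplicate of a document we have already seen
        seen_hashes.add(digest)
        if duplicate_line_fraction(doc) > max_dup_line_frac:
            continue  # too repetitive
        kept.append(doc)
    return kept


if __name__ == "__main__":
    corpus = [
        "MIT License\nPermission is hereby granted...",
        "mit license\npermission is hereby   granted...",  # same text, different case/spacing
        "Jane takes a spoonful of soup.\nJane takes a spoonful of soup.\nJane takes a spoonful of soup.",
        "Tom shares his soup with Jane. She finds it bitter.",
    ]
    for doc in filter_corpus(corpus):
        print(repr(doc[:40]))
```

Production pipelines typically add fuzzy deduplication (e.g. MinHash) on top of exact hashing to catch near-duplicates, which this sketch does not attempt.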

To train better LLMs without scaling up model or dataset sizes even further, and without relying on advancements in algorithms, it is therefore critical to improve the quality of the training data. Next, we will explore how that can be done, first for basic English language proficiency and then in more applied settings.

Making Small Language Models Speak Coherent English with Tiny Stories

The paper "TinyStories: How Small Can Language Models Be and Still Speak Coherent English?" demonstrates how data quality can dramatically impact model performance. Setting aside more complex abilities like arithmetic and translation, the authors argue that the core ability of language models should be to produce correct, coherent, meaningful, and diverse English text. Writing text with these qualities requires three essential skills: grammatical and lexical knowledge, basic factual knowledge, and contextual reasoning. To study these abilities, the researchers chose the language capabilities of a 3- to 4-year-old child as a reference point, arguing that this represents the simplest form of language that requires all three core skills. For instance, consider these examples:

Jack was hungry, so he went looking for _

grammar + factual information

Lily wanted to get either a cat or a dog. Her mother didn't let her get a dog so instead she _

grammar + reasoning

Small Language Models Struggle with Basic Language Generation

When the researchers tested whether small language models (SLMs) could complete simple children's stories, they found that despite the stories' seeming simplicity, SLMs struggle to produce meaningful and coherent completions:

Prompt

Tom and Jane are friends. One day, Jane goes to Tom’s house. Tom has a big pot of soup. He wants to share it with Jane. “Jane, do you want some soup?” Tom asks. “Yes, please. It looks yummy,” Jane says. Tom pours some soup into two bowls. He gives one bowl to Jane. Jane takes a spoonful of soup, but then she makes a face. The soup is

GPT2-S (137M) completion

very good. Tom gives Jane a small bowl of soup. Jane takes a spoonful of soup. Tom gives Jane a small bowl of soup. Jane takes a spoonful of soup. Tom gives Jane a small bowl of soup. Jane takes a spoonful of soup. …

Pythia-410M completion

too hot. She takes a spoonful of soup and then she takes a spoonful of soup. She takes a spoonful of soup and then she takes a spoonful of soup. …

GPT2-XL (1.5B) completion

too old. Tom scowls. “Don’t be that way! Your soup has to be fresh like your daughter,” Tom says, angry and disappointed. “Bring me some more,” Jane cries. “No! Go away, I like my soup old and fresh!”

TinyStories-trained model (28M) completion

very bitter. She does not like it. She says, “I don’t like this soup. It is too bitter.” Tom is sorry. He says, “I’m sorry, Jane. I didn’t know you don’t like bitter soup. I will make you something else.” He looks around the kitchen and finds some bread and cheese. He puts them on the table and says, “Here, Jane. You can have some bread and cheese. They are not bitter. They are sweet and yummy.” Jane is happy. She says, “Thank you, Tom. You are a good friend. I like bread and cheese. They are not bitter.”

Evaluating and Training Better SLMs with TinyStories

The central question of the TinyStories work is: Are large models and large amounts of training data fundamentally needed to achieve basic language generation abilities, or can small models and training datasets also get the job done?

To answer this question, the researchers created the TinyStories dataset, consisting of 2.12 million short English stories calibrated to a 3-year-old's comprehension level and generated by GPT-3.5 and GPT-4. The main challenge in creating TinyStories was ensuring diversity. Repeatedly prompting an LLM to generate stories with different random seeds is not enough, since the outputs would be repetitive. A higher sampling temperature can increase diversity, but it also risks degrading quality.
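The temperature trade-off can be made concrete in a few lines. Temperature divides the model's logits before the softmax: values above 1 flatten the next-token distribution, values below 1 sharpen it. The logits below are made-up numbers, and entropy is used as a simple proxy for diversity.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Convert logits to probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy in nats; higher means a flatter, more diverse distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


# Hypothetical next-token logits: one clearly best continuation, several unlikely ones.
logits = [5.0, 2.0, 1.0, 0.5, 0.0]

for t in (0.5, 1.0, 1.5, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: p(top)={probs[0]:.3f}, entropy={entropy(probs):.3f}")
```

Raising the temperature lowers the top token's probability and raises the entropy, but it does so by boosting every unlikely token, not only the good-but-rare ones, which is where the quality risk comes from.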

The solution was to prompt the GPT models to generate stories while varying the details of each prompt. To do that, the TinyStories authors curated a vocabulary of 1,500 simple words, consisting of verbs, nouns, and adjectives. For each story, they randomly selected three words (one verb, one noun, and one adjective) and combined them with randomly selected story features like dialogue, bad ending, moral value, plot twist, foreshadowing, or conflict. They then prompted the GPT models to generate a story based on these constraints. They also created an instruction-following variant of the dataset for fine-tuning, but we will focus on pretraining here.

Prompt: Write a short story (3-5 paragraphs) which only uses very simple words that a 3 year old child would likely understand. The story should use the verb “decorate”, the noun “thunder” and the adjective “ancient”. The story should have the following features: the story should contain at least one dialogue, the story has a bad ending. Remember to only use simple words!

Story: Once upon a time, in an ancient house, there lived a girl named Lily. She loved to decorate her room with pretty things. One day, she found a big box in the attic. She opened it and saw many shiny decorations. Lily was very happy and decided to use them in her room.

As Lily was decorating her room, the sky outside became dark. There was a loud thunder sound, and Lily got scared. She ran to her mom and said, “Mommy, the thunder is so loud!” Her mom hugged her and said, “Don’t worry, it will pass soon.” But the thunder did not stop. It got louder and louder, and the ancient house started to shake. Suddenly, the roof fell down on the pretty decorations. Lily was sad because her room was not pretty anymore. The end.
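The prompt-randomization recipe can be sketched as follows. The word lists are tiny hypothetical stand-ins for the authors' 1,500-word vocabulary, the feature phrasings are illustrative, the template mirrors the example prompt above, and drawing one or two features per story is an assumption. The call to an actual LLM is left out.

```python
import random

# Hypothetical stand-ins for the curated vocabulary (the real one has ~1,500 words).
VERBS = ["decorate", "jump", "share", "find"]
NOUNS = ["thunder", "ball", "garden", "soup"]
ADJECTIVES = ["ancient", "shiny", "happy", "bitter"]

# Story features mentioned in the text; the exact phrasing here is illustrative.
FEATURES = {
    "dialogue": "the story should contain at least one dialogue",
    "bad ending": "the story has a bad ending",
    "moral value": "the story has a moral value",
    "plot twist": "the story has a plot twist",
    "foreshadowing": "the story uses foreshadowing",
    "conflict": "the story has some form of conflict",
}

TEMPLATE = (
    "Write a short story (3-5 paragraphs) which only uses very simple words that a "
    "3 year old child would likely understand. The story should use the verb "
    '"{verb}", the noun "{noun}" and the adjective "{adjective}". '
    "The story should have the following features: {features}. "
    "Remember to only use simple words!"
)


def make_prompt(rng: random.Random, max_features: int = 2) -> str:
    """Sample one verb, one noun, one adjective, and a random subset of story features."""
    verb = rng.choice(VERBS)
    noun = rng.choice(NOUNS)
    adjective = rng.choice(ADJECTIVES)
    k = rng.randint(1, max_features)  # assumption: 1..max_features features per story
    features = rng.sample(list(FEATURES.values()), k)
    return TEMPLATE.format(verb=verb, noun=noun, adjective=adjective,
                           features=", ".join(features))


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the prompts are reproducible
    for _ in range(3):
        print(make_prompt(rng))
        print()
```

Because each prompt combines a different word triple and feature subset, the number of distinct prompts grows multiplicatively with the size of each list, which is how this approach obtains diversity without raising the sampling temperature.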

Evaluation: Models trained on TinyStories are evaluated by asking them to complete the beginning of a story. The main evaluation criteria are grammar, creativity, and consistency with the story's beginning. To judge whether models have acquired these abilities, the authors deviated from traditional LLM benchmark approaches such as MMLU and HellaSwag. They argue that the structured nature of these benchmarks, where the models under test typically answer multiple-choice questions, is not suitable for these criteria; for example, it is not clear how to evaluate creativity with a multiple-choice question. Instead, the authors use an LLM-as-a-judge approach and ask GPT-4 to grade model responses on the three criteria, as shown below:

When they got outside, they saw a big storm coming. The wind was blowing hard, and the rain was pouring down. Lily, her mom, and her dad knew they had to find a safe place to hide. They found a big tree and hid under it. The storm passed, and the sun came out again. Lily, her mom, and her dad were all safe and warm inside their ancient house.

The grammar is generally correct, but there are a few minor errors: ⟨list omitted⟩.

Overall, the student’s completion of the story demonstrates adequate language abilities and creativity, but could benefit from better integration of the shiny decorations and minor grammar improvements.
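A judging loop of this kind could look like the sketch below. It only builds the grading prompt and parses the judge's reply; the GPT-4 call itself is omitted, the prompt wording is a paraphrase rather than the paper's exact text, and the "Grammar: X/10" reply format is an assumption that a real pipeline would have to request explicitly.

```python
import re
from statistics import mean

CRITERIA = ("grammar", "creativity", "consistency")

# Paraphrased, hypothetical judge prompt; *** separates the given beginning from the completion.
JUDGE_TEMPLATE = (
    "The following exercise asks a student to complete the beginning of a story. "
    "The symbol *** marks where the given beginning ends and the student's completion starts.\n\n"
    "{beginning}***{completion}\n\n"
    "Grade the completion for grammar, creativity, and consistency with the beginning. "
    "End your answer with one line per criterion in the form 'Grammar: X/10'."
)


def build_judge_prompt(beginning: str, completion: str) -> str:
    return JUDGE_TEMPLATE.format(beginning=beginning, completion=completion)


def parse_scores(reply: str) -> dict:
    """Extract 'Criterion: X/10' scores from a judge reply; raise if one is missing."""
    scores = {}
    for criterion in CRITERIA:
        match = re.search(rf"{criterion}\s*:\s*(\d+(?:\.\d+)?)\s*/\s*10", reply, re.IGNORECASE)
        if match is None:
            raise ValueError(f"no score found for {criterion!r}")
        scores[criterion] = float(match.group(1))
    return scores


def aggregate(replies):
    """Average each criterion over many graded completions from the same model."""
    parsed = [parse_scores(r) for r in replies]
    return {c: mean(p[c] for p in parsed) for c in CRITERIA}


if __name__ == "__main__":
    # Hypothetical judge replies for two completions produced by the same model.
    replies = [
        "The grammar is mostly correct... Grammar: 8/10\nCreativity: 6/10\nConsistency: 7/10",
        "Grammar: 9/10\nCreativity: 5/10\nConsistency: 8/10",
    ]
    print(aggregate(replies))
```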

Training and models: To test whether small models can acquire the desired linguistic abilities when trained on the right data, the authors trained several SLMs on the TinyStories dataset, ranging from 1M to 80M parameters and varying both the embedding dimension (width) and the number of transformer blocks (depth).
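To get a feel for how width and depth translate into parameter counts, a common back-of-the-envelope estimate for a GPT-style decoder is about 12 * d^2 weights per transformer block (4 * d^2 for the attention projections, 8 * d^2 for an MLP with a 4x hidden size) plus V * d for the token embeddings. The sketch ignores biases, layer norms, and positional embeddings, assumes tied input/output embeddings, and uses a hypothetical 10,000-token vocabulary; the exact TinyStories configurations may differ.

```python
def approx_params(width: int, depth: int, vocab_size: int = 10_000) -> int:
    """Rough parameter count of a GPT-style decoder-only transformer.

    Assumptions (illustrative): 4*d^2 attention weights (Q, K, V, output) and
    8*d^2 MLP weights (hidden size 4*d) per block, tied input/output embeddings,
    no biases, layer norms, or positional embeddings, hypothetical vocab size.
    """
    per_block = 12 * width * width
    embedding = vocab_size * width
    return depth * per_block + embedding


if __name__ == "__main__":
    for width in (64, 128, 256, 512, 768):
        for depth in (1, 2, 4, 8):
            n = approx_params(width, depth)
            print(f"width={width:4d} depth={depth}: ~{n / 1e6:.1f}M params")
```

Under these assumptions the grid spans roughly 0.7M to 64M parameters, the same order of magnitude as the models in the paper, and for the narrowest models the embedding matrix accounts for most of the parameters.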

Results

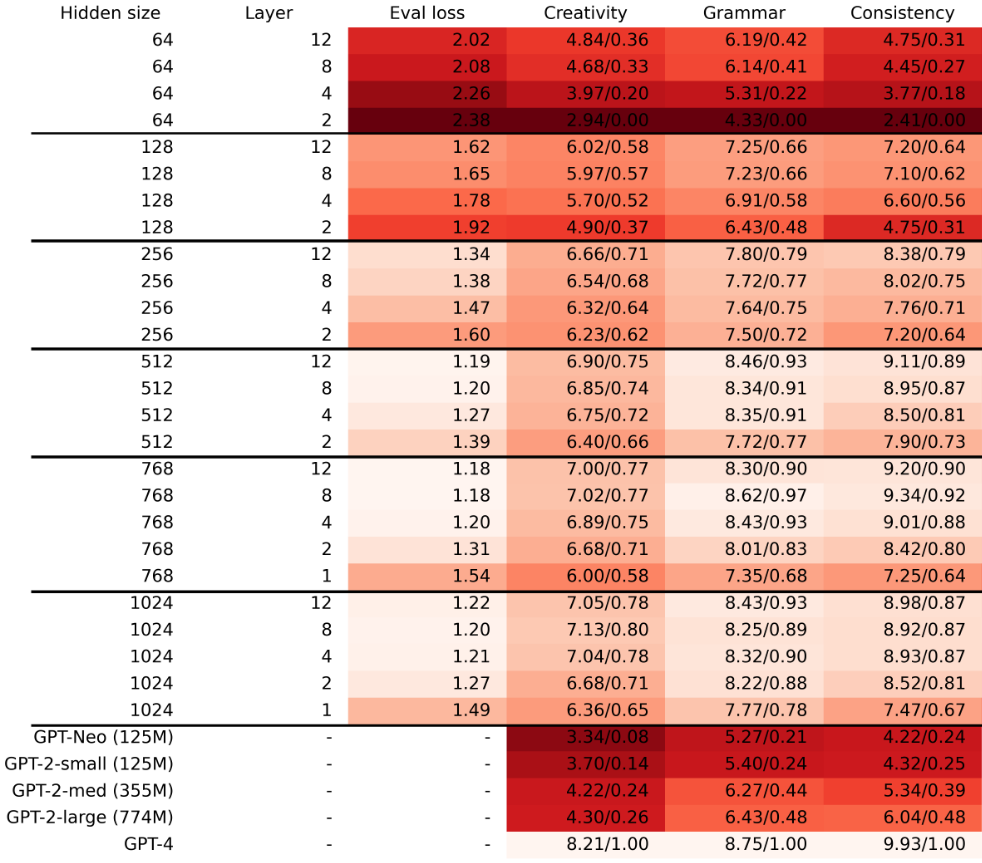

Figure 7 shows how models of different widths and depths compare across the three evaluation criteria of grammar, consistency, and creativity. Grammar appears to be the easiest skill to acquire, with both narrow and shallow models achieving relatively high scores. Consistency requires an embedding dimension of at least 128 to be learned well, and narrow models additionally need somewhat more depth. Creativity seems to be the hardest skill to acquire, with none of the models matching GPT-4's results. That said, one might question whether GPT-4 is an appropriate judge of creativity, particularly when it grades its own completions, since LLMs are known to favor their own generations.

Overall, the results show that very small models, with 1M to 80M parameters, can outperform much larger ones with 125M or more parameters when they are trained on focused, high-quality data. Notably, each of these models can be trained on a single V100 GPU in 30 hours or less.

While the simple language setting that these results were obtained in is admittedly somewhat of a toy problem, we will see next that the same principles also hold in more practical tasks.

Data Quality in Practice

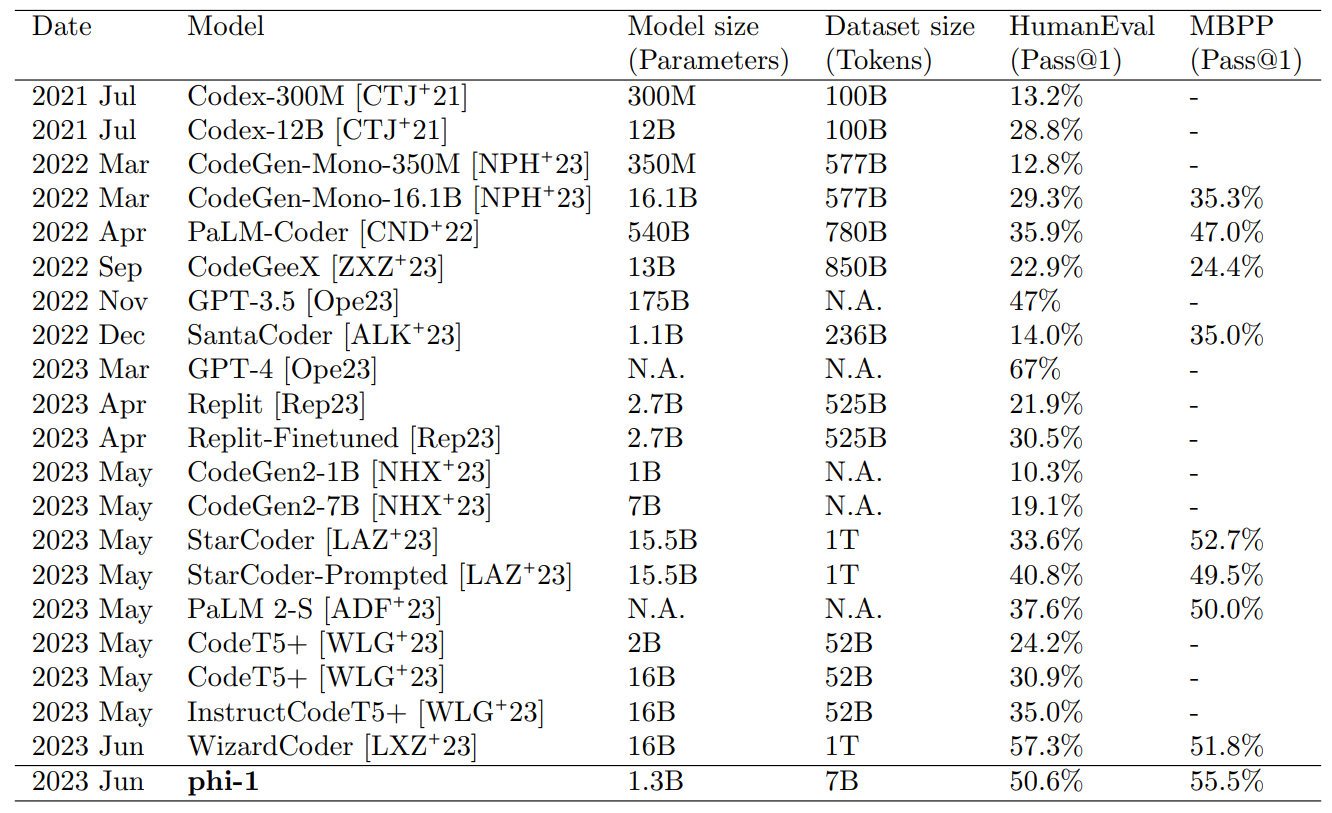

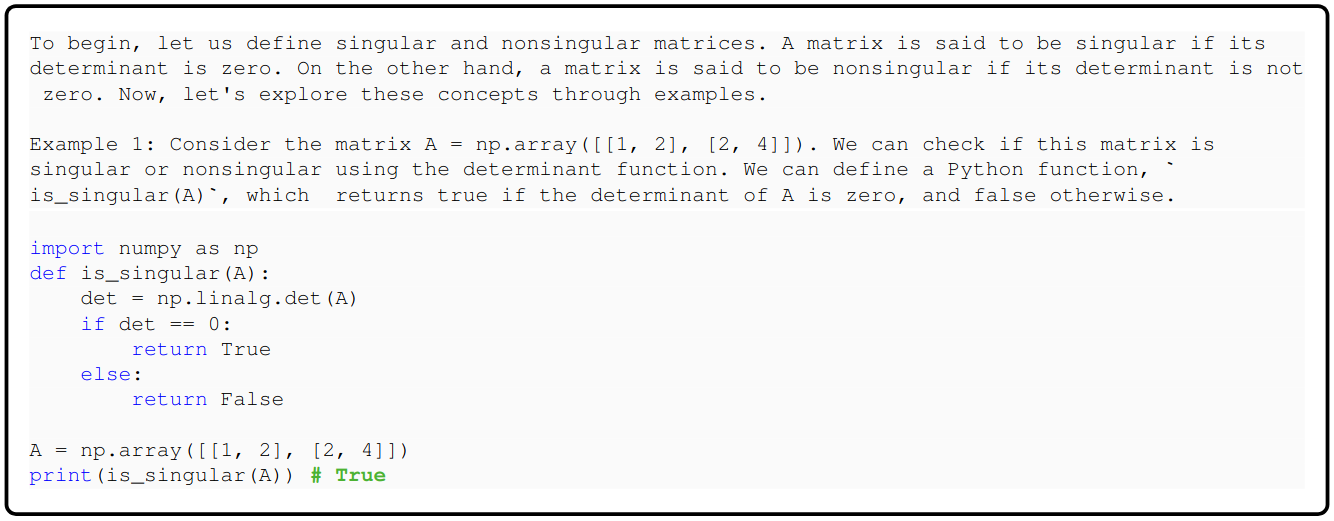

The TinyStories work demonstrates that a) data quality can dramatically impact model performance, potentially more than scale in terms of model size and data quantity, and that b) high-quality data can be generated synthetically by larger models. Building on these insights, the "Textbooks Are All You Need" (Textbooks) paper scaled up the approach to create practically useful models for Python coding. The authors trained Phi-1, a model that was small compared to most other strong LLMs at the time (June 2023), with only 1.3B parameters, and that was trained on much less data (8 epochs over 7B tokens), yet still outperformed many of those larger models on coding tasks.
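A quick calculation puts these numbers in perspective. It relies on two widely used rules of thumb that are not from the Textbooks paper: training compute of roughly C ≈ 6 * N * D FLOPs for N parameters and D training tokens, and the Chinchilla heuristic of about 20 training tokens per parameter for compute-optimal training.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule of thumb: about 6 FLOPs per parameter per training token (forward + backward pass)."""
    return 6.0 * n_params * n_tokens


def chinchilla_optimal_tokens(n_params: float, tokens_per_param: float = 20.0) -> float:
    """Rule of thumb from the Chinchilla work: roughly 20 training tokens per parameter."""
    return tokens_per_param * n_params


n_params = 1.3e9       # Phi-1 model size (from the text)
unique_tokens = 7e9    # size of the pretraining data (from the text)
epochs = 8
tokens_seen = epochs * unique_tokens  # total tokens processed during training

print(f"tokens seen:         {tokens_seen / 1e9:.0f}B")
print(f"approx. compute:     {training_flops(n_params, tokens_seen):.2e} FLOPs")
print(f"Chinchilla-optimal:  {chinchilla_optimal_tokens(n_params) / 1e9:.0f}B tokens")
```

By this estimate, Phi-1 processed about 56B tokens, roughly twice the Chinchilla-optimal count for its size, but drawn from only 7B unique tokens, about a quarter of that heuristic budget, repeated eight times.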

The success of Phi-1 and the Textbooks work led to a series of follow-on models, Phi-1.5, Phi-2, Phi-3, and Phi-4, and to a greater emphasis on data quality in other work as well, with recent models like Llama3 putting more emphasis on high-quality data. But how exactly do we obtain high-quality data in practice?

The Phi-1 Dataset

The secret sauce behind Phi-1's success, despite its comparatively small scale, lies in the three datasets used to train it. First, the authors filtered the Python portions of The Stack v1 and the StackOverflow datasets from 35B tokens down to a subset of 6B "instructive" tokens. The filtering process involved using GPT-4 to annotate 100K documents for their "educational value" and then training a random forest classifier on the documents' CodeGen embeddings to extend these ratings to the full dataset. See Figure 3 for examples of samples with low and high educational value.
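The annotate-then-extrapolate pattern can be sketched with the standard library alone. Everything here is a stand-in: four hand-crafted statistics replace the CodeGen embeddings, five made-up labels replace the 100K GPT-4 annotations, and a logistic-regression classifier trained by plain gradient descent replaces the random forest, only to keep the sketch free of dependencies. The structure is the point: label a small sample with an expensive judge, fit a cheap classifier on vector representations, then score and filter the full corpus.

```python
import math
import re


def features(code: str) -> list[float]:
    """Hand-crafted stand-in for a learned embedding: four simple statistics of a code sample."""
    lines = [ln.strip() for ln in code.splitlines() if ln.strip()]
    n = len(lines) or 1
    import_frac = sum(ln.startswith(("import ", "from ")) for ln in lines) / n
    comment_frac = sum("#" in ln for ln in lines) / n
    has_function = 1.0 if any(ln.startswith("def ") for ln in lines) else 0.0
    constant_frac = sum(bool(re.match(r"[A-Z_]+\s*=", ln)) for ln in lines) / n
    return [import_frac, comment_frac, has_function, constant_frac]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logreg(xs, ys, lr=1.0, epochs=500):
    """Batch gradient descent on the mean logistic loss (stand-in for the random forest)."""
    dim = len(xs[0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * dim, 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y  # dLoss/dLogit
            for i in range(dim):
                gw[i] += err * x[i]
            gb += err
        w = [wi - lr * gi / len(xs) for wi, gi in zip(w, gw)]
        b -= lr * gb / len(xs)
    return w, b


def educational_score(model, code: str) -> float:
    """Predicted probability that a sample has high educational value."""
    w, b = model
    return sigmoid(sum(wi * xi for wi, xi in zip(w, features(code))) + b)


# Hypothetical judge labels for a tiny annotated subset: 1 = educational, 0 = not.
annotated = [
    ("def binary_search(arr, target):\n    # halve the search interval each step\n    lo, hi = 0, len(arr) - 1", 1),
    ("def fib(n):\n    # iterate instead of recursing\n    a, b = 0, 1\n    for _ in range(n):\n        a, b = b, a + b\n    return a", 1),
    ("import config\nDEBUG = True\nPORT = 8080", 0),
    ("from settings import *\nTIMEOUT = 30\nRETRIES = 3", 0),
    ("import os\nimport sys\nimport json", 0),
]
model = train_logreg([features(c) for c, _ in annotated], [y for _, y in annotated])

# Extend the ratings to the unlabeled corpus and keep only high-scoring samples.
corpus = [
    "def quicksort(items):\n    # pick a pivot and partition\n    return items",
    "import config\nLOG_LEVEL = 10\nPORT = 9090",
]
kept = [code for code in corpus if educational_score(model, code) >= 0.5]
print(kept)
```

In a real pipeline the classifier is trained once on the annotated subset and then applied to millions of samples, so a cheap model over precomputed embeddings is what keeps the approach affordable.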

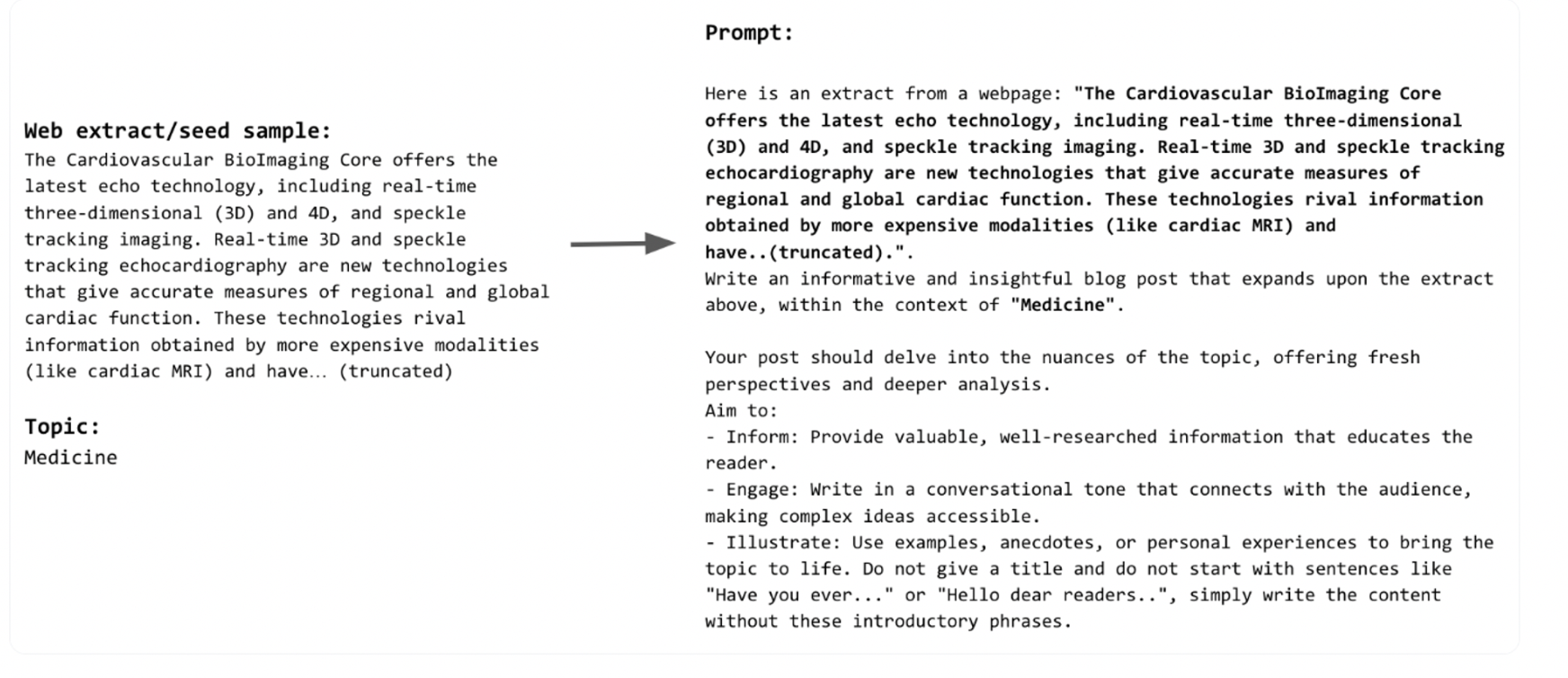

Second, the authors generated a synthetic "textbook" dataset of less than 1B tokens, using GPT-3.5. They achieved diversity by prompting GPT-3.5 to generate articles on different topics and for different audiences. Together, the filtered and generated data comprise the CodeTextbook dataset used for pretraining.

Finally, the authors generated the CodeExercises dataset for post-training/fine-tuning, consisting of 180M tokens of Python exercises generated by GPT-3.5. Each exercise consists of a Python function signature and docstring, together with the function body that the model must learn to complete. Although CodeExercises is a rather small dataset, fine-tuning on it after pretraining on CodeTextbook significantly improved the model's coding capabilities. More recent work, such as Llama3, now also uses similar "annealing" techniques, where models are trained on high-quality code and math data at the end of pretraining.
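The prompt/completion structure of such an exercise can be illustrated with Python's standard `ast` module: everything up to the end of the docstring is the prompt, and the function body after it is the completion the model learns to produce. The example exercise is made up, and splitting exercises this way (for instance, to compute the loss only on the completion) is an assumption about how such data might be prepared, not a detail confirmed by the paper.

```python
import ast
import textwrap

# A made-up exercise in the style described above: signature + docstring, then the body.
EXERCISE = textwrap.dedent('''
    def count_vowels(text: str) -> int:
        """Return how many characters in `text` are vowels (a, e, i, o, u), ignoring case."""
        return sum(1 for ch in text.lower() if ch in "aeiou")
''').lstrip()


def split_exercise(source: str) -> tuple[str, str]:
    """Split a single-function exercise into (prompt, completion) at the end of its docstring."""
    tree = ast.parse(source)
    func = next(node for node in tree.body if isinstance(node, ast.FunctionDef))
    if ast.get_docstring(func) is None:
        raise ValueError("exercise has no docstring")
    docstring_node = func.body[0]        # the docstring is the function's first statement
    lines = source.splitlines(keepends=True)
    cut = docstring_node.end_lineno      # 1-based line number where the docstring ends
    return "".join(lines[:cut]), "".join(lines[cut:])


prompt, completion = split_exercise(EXERCISE)
print("PROMPT:\n" + prompt)
print("COMPLETION:\n" + completion)
```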

Like many recent ML projects, e.g. GPT, this line of work became increasingly closed as it started to become commercially viable. Therefore, while the quality-focused approach of the Textbooks paper proved successful, this success also came with less openness about the methodological details of the work compared to its TinyStories predecessor. Model weights for Phi-1 and its successors are openly available, but the training datasets and their curation code, including the prompts and topics for filtering and data generation, are not.

SmolLM - An Open Phi Reproduction

Enter SmolLM, Hugging Face's open reproduction of the Phi approach. SmolLM models were trained on a collection of three openly available datasets:

- Cosmopedia v2: a synthetic 28B token textbook collection generated by Mixtral

- Python-Edu: 4B tokens of educational Python samples filtered from The Stack

- FineWeb-Edu: 220B tokens of educational web samples filtered from deduplicated FineWeb
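When several sources are combined, one simple starting point is to sample each source in proportion to its size. The sketch below computes those proportions for the three token counts listed above and draws a source for each of the next n training chunks (e.g. fixed-length token sequences). Proportional sampling is an assumption made for illustration; the actual SmolLM mixture weights are not described here, and in practice smaller high-quality sources are often upsampled.

```python
import random

# Token counts (in billions) from the list above.
SOURCES = {
    "Cosmopedia v2": 28,
    "Python-Edu": 4,
    "FineWeb-Edu": 220,
}


def proportional_weights(sizes: dict) -> dict:
    """Sampling probability of each source when sampled in proportion to its token count."""
    total = sum(sizes.values())
    return {name: size / total for name, size in sizes.items()}


def sample_sources(weights: dict, n: int, seed: int = 0) -> list:
    """Draw the source of each of the next n equally sized training chunks."""
    rng = random.Random(seed)
    names = list(weights)
    return rng.choices(names, weights=[weights[k] for k in names], k=n)


weights = proportional_weights(SOURCES)
for name, w in weights.items():
    print(f"{name:14s} {w:6.1%}")

draws = sample_sources(weights, 10_000)
print({name: draws.count(name) for name in SOURCES})
```

Under proportional sampling, Python-Edu would make up only about 1.6% of the training tokens, which illustrates why mixture weights are usually tuned rather than left proportional.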

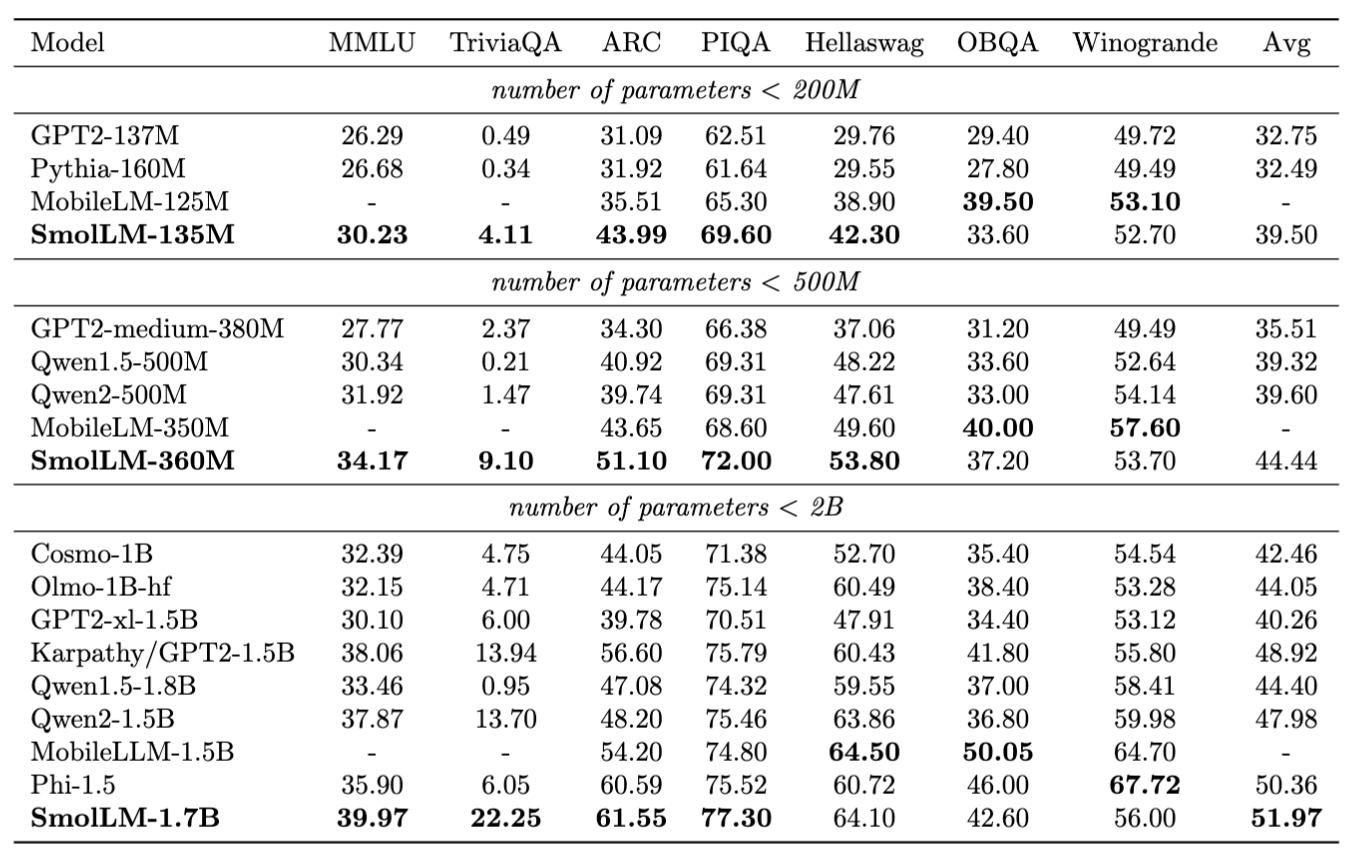

With this approach, SmolLM models achieve competitive performance compared to other SLMs of similar size:

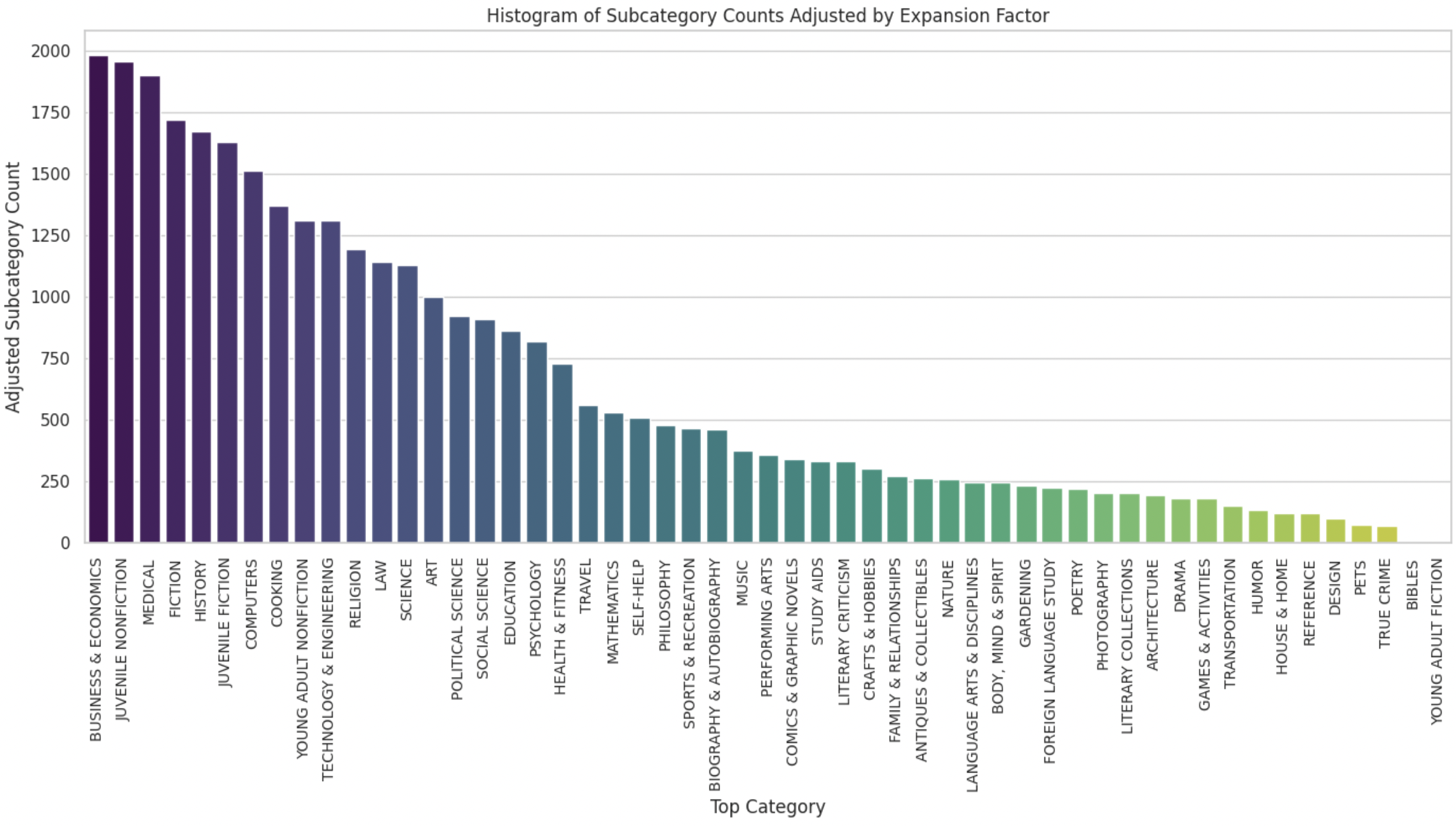

Generating Cosmopedia v2: Cosmopedia v2 consists of textbook articles generated by prompting Mixtral-8x7B-Instruct. To ensure diversity, the authors curated a set of 5,000 topics grouped into 51 categories, sampled web documents on these topics from FineWeb, and used them as seeds for the prompts. The topic distribution and prompting process are shown below:
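Seeding generation prompts with web documents can be sketched as follows. The topic taxonomy, seed snippets, target audiences, and prompt wording are all hypothetical placeholders; the sketch only shows the mechanics of drawing a category, then a topic, then a seed document from that topic, and wrapping the seed in a generation prompt. Sampling the category first, rather than a document uniformly at random, keeps rare categories from being drowned out by frequent ones; this balancing choice is an assumption of the sketch.

```python
import random

# Hypothetical taxonomy: category -> topic -> seed documents (web excerpts).
TAXONOMY = {
    "Science": {
        "astronomy": ["The James Webb telescope observes in the infrared..."],
        "chemistry": ["Catalysts lower the activation energy of a reaction..."],
    },
    "Cooking": {
        "baking": ["Sourdough relies on wild yeast and lactic acid bacteria..."],
    },
}

# Hypothetical target audiences, varied to add further diversity.
AUDIENCES = ["young children", "high school students", "college students"]

PROMPT = (
    "Here is an extract from a webpage about {topic}:\n\"{seed}\"\n\n"
    "Write a textbook-style section for {audience} that teaches the concepts "
    "related to this extract. Do not copy the extract."
)


def sample_prompt(rng: random.Random) -> str:
    """Category first, then topic, then a seed document, so small categories are not drowned out."""
    category = rng.choice(list(TAXONOMY))
    topic = rng.choice(list(TAXONOMY[category]))
    seed = rng.choice(TAXONOMY[category][topic])
    audience = rng.choice(AUDIENCES)
    return PROMPT.format(topic=topic, seed=seed, audience=audience)


rng = random.Random(0)
for _ in range(2):
    print(sample_prompt(rng), end="\n\n")
```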

Filtering web and code datasets: The filtering process for FineWeb-Edu and Python-Edu uses an educational value classifier trained on Llama3-70B-Instruct annotations. The classifier consists of a classification head on top of Snowflake-arctic-embed embeddings and was trained on approximately 500K annotated samples per dataset.

Conclusion

The evidence is clear: by prioritizing data quality over quantity, we can train smaller yet more capable models on less data. This quality-first approach can be implemented through two main strategies: filtering web-scale datasets based on educational value, and generating synthetic data using larger models.

Many of the practices discussed here have now become standard for LLM training, such as a strong focus on data quality, using larger models to filter and generate the training data of smaller ones, and an annealing stage with high-quality data at the end of pretraining.

References

- [1] Training language models to follow instructions with human feedback - Long Ouyang, Jeff Wu, Xu Jiang, Diogo Almeida, Carroll L. Wainwright, Pamela Mishkin, Chong Zhang, Sandhini Agarwal, Katarina Slama, Alex Ray, others

- [2] Direct Preference Optimization: Your Language Model is Secretly a Reward Model - Rafael Rafailov, Archit Sharma, Eric Mitchell, Stefano Ermon, Christopher D. Manning, Chelsea Finn

- [3] Scaling Laws for Neural Language Models - Jared Kaplan, Sam McCandlish, Tom Henighan, Tom B. Brown, Benjamin Chess, Rewon Child, Scott Gray, Alec Radford, Jeffrey Wu, Dario Amodei

- [4] Training Compute-Optimal Large Language Models - Jordan Hoffmann, Sebastian Borgeaud, Arthur Mensch, Elena Buchatskaya, Trevor Cai, Eliza Rutherford, Diego de Las Casas, Lisa Anne Hendricks, Johannes Welbl, Aidan Clark, others

- [5] Attention Is All You Need - Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, Illia Polosukhin

- [6] Improving Language Understanding by Generative Pretraining - Alec Radford, Karthik Narasimhan, Tim Salimans, Ilya Sutskever

- [7] FlashAttention: Fast and Memory-Efficient Exact Attention with IO-Awareness - Tri Dao, Daniel Y. Fu, Stefano Ermon, Atri Rudra, Christopher Ré

- [8] GQA: Training Generalized Multi-Query Transformer Models from Multi-Head Checkpoints - Joshua Ainslie, James Lee-Thorp, Michiel de Jong, Yury Zemlyanskiy, Federico Lebrón, Sumit Sanghai

- [9] Longformer: The Long-Document Transformer - Iz Beltagy, Matthew E. Peters, Arman Cohan

- [10] RoFormer: Enhanced Transformer with Rotary Position Embedding - Jianlin Su, Yu Lu, Shengfeng Pan, Ahmed Murtadha, Bo Wen, Yunfeng Liu

- [11] Scaling Data-Constrained Language Models - Niklas Muennighoff, Alexander Rush, Boaz Barak, Teven Le Scao, Nouamane Tazi, Aleksandra Piktus, Sampo Pyysalo, Thomas Wolf, Colin A Raffel, NeurIPS 2023

- [12] Will We Run Out of Data? Limits of LLM Scaling Based on Human-Generated Data - Pablo Villalobos, Anson Ho, Jaime Sevilla, Tamay Besiroglu, Lennart Heim, Marius Hobbhahn, arXiv preprint arXiv:2211.04325 2024

- [13] The Pile: An 800GB Dataset of Diverse Text for Language Modeling - Leo Gao, Stella Biderman, Sid Black, Laurence Golding, Travis Hoppe, Charles Foster, Jason Phang, Horace He, Anish Thite, Noa Nabeshima, others, arXiv preprint arXiv:2101.00027 2020

- [14] RedPajama: an Open Dataset for Training Large Language Models - Maurice Weber, Daniel Y. Fu, Quentin Anthony, Yonatan Oren, Shane Adams, Anton Alexandrov, Xiaozhong Lyu, Huu Nguyen, Xiaozhe Yao, Virginia Adams, Ben Athiwaratkun, Rahul Chalamala, Kezhen Chen, Max Ryabinin, Tri Dao, Percy Liang, Christopher Ré, Irina Rish, Ce Zhang, NeurIPS Datasets and Benchmarks Track 2024

- [15] The FineWeb Datasets: Decanting the Web for the Finest Text Data at Scale - Guilherme Penedo, Hynek Kydlíček, Loubna Ben Allal, Anton Lozhkov, Margaret Mitchell, Colin Raffel, Leandro Von Werra, Thomas Wolf, NeurIPS 2024

- [16] StarCoder 2 and The Stack v2: The Next Generation - Anton Lozhkov, Raymond Li, Loubna Ben Allal, Federico Cassano, Joel Lamy-Poirier, Nouamane Tazi, Ao Tang, Dmytro Pykhtar, Jiawei Liu, Yuxiang Wei, others

- [17] Deduplicating Training Data Makes Language Models Better - Katherine Lee, Daphne Ippolito, Andrew Nystrom, Chiyuan Zhang, Douglas Eck, Chris Callison-Burch, Nicholas Carlini, ACL 2022

- [18] Textbooks Are All You Need - Suriya Gunasekar, Yi Zhang, Jyoti Aneja, Caio César Teodoro Mendes, Allie Del Giorno, Sivakanth Gopi, Mojan Javaheripi, Piero Kauffmann, Gustavo de Rosa, Olli Saarikivi, others

- [19] TinyStories: How Small Can Language Models Be and Still Speak Coherent English? - Ronen Eldan, Yuanzhi Li

- [20] Measuring Massive Multitask Language Understanding - Dan Hendrycks, Collin Burns, Steven Basart, Andy Zou, Mantas Mazeika, Dawn Song, Jacob Steinhardt, ICLR 2021

- [21] HellaSwag: Can a Machine Really Finish Your Sentence? - Rowan Zellers, Ari Holtzman, Yonatan Bisk, Ali Farhadi, Yejin Choi, ACL 2019

- [22] LLM Evaluators Recognize and Favor Their Own Generations - Arjun Panickssery, Samuel R Bowman, Shi Feng, NeurIPS 2024

- [23] Textbooks Are All You Need II: phi-1.5 Technical Report - Yuanzhi Li, Sébastien Bubeck, Ronen Eldan, Allie Del Giorno, Suriya Gunasekar, Yin Tat Lee, arXiv preprint arXiv:2309.05463 2023

- [24] Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone - Marah Abdin, Jyoti Aneja, Hany Awadalla, Ahmed Awadallah, Ammar Ahmad Awan, Nguyen Bach, Amit Bahree, Arash Bakhtiari, Jianmin Bao, Harkirat Behl, others, arXiv preprint arXiv:2404.14219 2024

- [25] Phi-4 Technical Report - Marah Abdin, Jyoti Aneja, Harkirat Behl, Sébastien Bubeck, Ronen Eldan, Suriya Gunasekar, Michael Harrison, Russell J Hewett, Mojan Javaheripi, Piero Kauffmann, others, arXiv preprint arXiv:2412.08905 2024

- [26] The Llama 3 Herd of Models - Abhimanyu Dubey, Abhinav Jauhri, Abhinav Pandey, Abhishek Kadian, Ahmad Al-Dahle, Aiesha Letman, Akhil Mathur, Alan Schelten, Amy Yang, Angela Fan, others, arXiv preprint arXiv:2407.21783 2024

- [27] CodeGen: An Open Large Language Model for Code with Multi-Turn Program Synthesis - Erik Nijkamp, Bo Pang, Hiroaki Hayashi, Lifu Tu, Huan Wang, Yingbo Zhou, Silvio Savarese, Caiming Xiong, arXiv preprint arXiv:2203.13474 2022

- [28] SmolLM - blazingly fast and remarkably powerful - Loubna Ben Allal, Anton Lozhkov, Elie Bakouch, Leandro von Werra, Thomas Wolf, 2024

- [29] Arctic-Embed: Scalable, Efficient, and Accurate Text Embedding Models - Luke Merrick, Danmei Xu, Gaurav Nuti, Daniel Campos, 2024